K-means and K-nearest neighbours (KNN) are completely different methods in machine learning. The fact that they both have the letter K in their name is a coincidence.

K-means is a clustering algorithm that tries to partition a set of points into K sets (clusters) such that the points in each cluster tend to be near each other. It is unsupervised because the points have no external classification.

In a typical k-means problem, we have n data points and want to partition them into K clusters so that the points in each cluster tend to be close to each other.

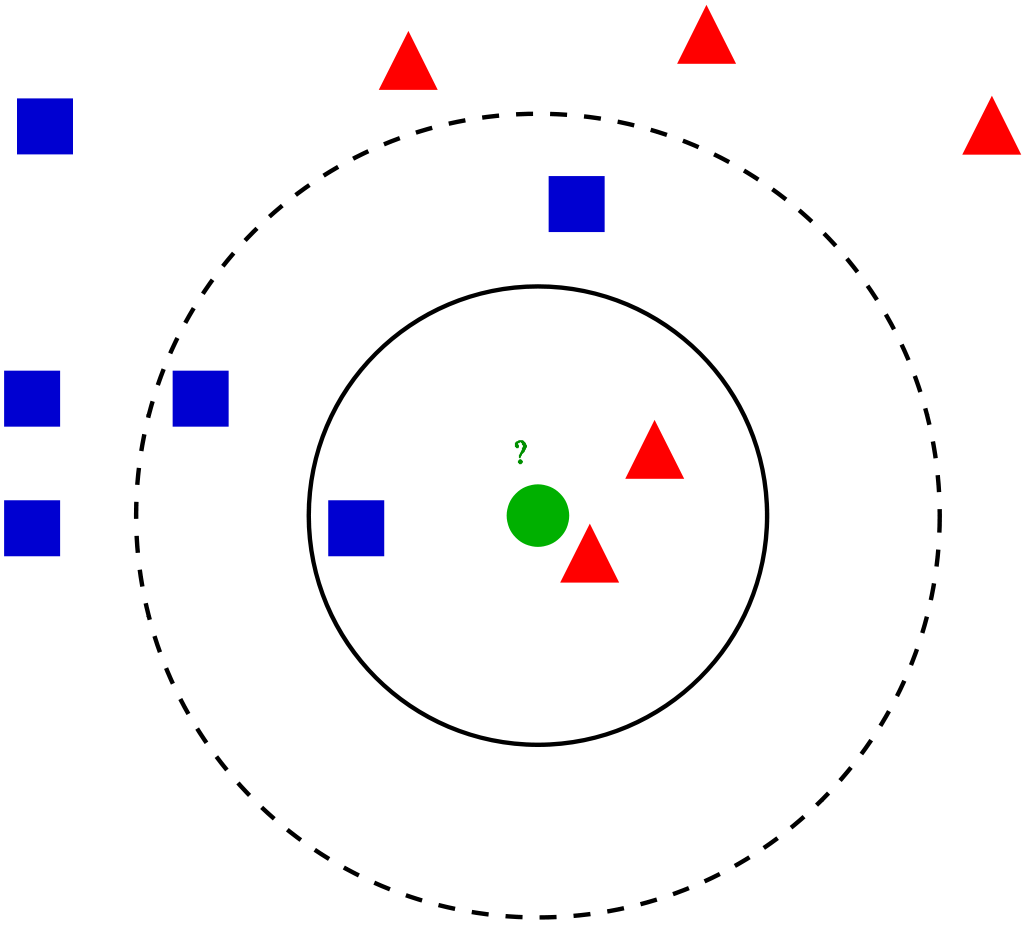
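As a rough illustration of the clustering idea described above, here is a minimal sketch using scikit-learn's KMeans. The six points and the choice of K = 2 are made up for this example and are not from the original post.

```python
import numpy as np
from sklearn.cluster import KMeans

# Six hypothetical 2-D points forming two well-separated groups (illustrative data).
points = np.array([
    [1.0, 1.0], [1.5, 2.0], [1.0, 1.5],   # group near the origin
    [8.0, 8.0], [8.5, 9.0], [9.0, 8.0],   # group far from the origin
])

# Ask for K = 2 clusters; random_state makes the run repeatable.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
cluster_ids = kmeans.fit_predict(points)

print(cluster_ids)              # one cluster index per point
print(kmeans.cluster_centers_)  # the two learned centroids
```

Note that no labels are passed to the model: the cluster indices it returns are arbitrary names for the groups it found, not known classes.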

K-nearest neighbours (KNN) is a classification (or regression) algorithm that determines the class of a point by combining the classes of its K nearest points. It is supervised because you classify a point based on the known classes of other points.

In a typical KNN problem, we already have n data points, each labeled with one of several classes. Given a new data point P, we want to know which class P belongs to. Here K is the number of neighbours consulted, not the number of classes.
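The voting idea can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions (Euclidean distance, majority vote, Python 3.8+ for math.dist); the function name knn_classify and the sample points are hypothetical.

```python
from collections import Counter
import math

def knn_classify(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    # Pair each Euclidean distance with its label, then sort by distance.
    by_distance = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    # Majority vote among the k nearest labels.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical labeled points: class "A" near the origin, class "B" far away.
train_points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
train_labels = ["A", "A", "A", "B", "B", "B"]

print(knn_classify(train_points, train_labels, (2, 2)))  # A
print(knn_classify(train_points, train_labels, (7, 9)))  # B
```

Unlike k-means, the labels are an input here: the new point simply takes the most common label among its K nearest labeled neighbours.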

KNN example with Scikit-learn

Defining dataset

Let’s first create your own dataset. You need two kinds of columns in your data: features and a label. Both are required because KNN is a supervised algorithm: it learns from examples whose labels are already known.

# Assigning features and label variables

# First Feature

weather=['Sunny','Sunny','Overcast','Rainy','Rainy','Rainy','Overcast','Sunny','Sunny', 'Rainy','Sunny','Overcast','Overcast','Rainy']

# Second Feature

temp=['Hot','Hot','Hot','Mild','Cool','Cool','Cool','Mild','Cool','Mild','Mild','Mild','Hot','Mild']

# Label or target variable

play=['No','No','Yes','Yes','Yes','No','Yes','No','Yes','Yes','Yes','Yes','Yes','No']

In this dataset, you have two features (weather and temperature) and one label (play).

Encoding data columns

Many machine learning algorithms require numerical input, so you need to convert categorical columns into numerical ones.

To encode this data, you can map each value to a number, e.g. Overcast: 0, Rainy: 1, and Sunny: 2.
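Done by hand, such a mapping might look like the sketch below. The dictionary weather_codes and the short sample list are hypothetical and only illustrate the idea.

```python
# Hypothetical hand-written mapping from category name to integer code.
weather_codes = {"Overcast": 0, "Rainy": 1, "Sunny": 2}

sample = ["Sunny", "Overcast", "Rainy", "Sunny"]

# Look up each category in the mapping.
encoded = [weather_codes[w] for w in sample]
print(encoded)  # [2, 0, 1, 2]
```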

# Import LabelEncoder

from sklearn import preprocessing

#creating labelEncoder

le = preprocessing.LabelEncoder()

# Converting string labels into numbers.

weather_encoded=le.fit_transform(weather)

print(weather_encoded)

# Output: [2 2 0 1 1 1 0 2 2 1 2 0 0 1]

Here, you imported the preprocessing module and created a LabelEncoder object. Using this object, you can fit and transform the “weather” column into a numeric column.
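To see which number a fitted LabelEncoder assigned to each category, you can inspect its classes_ attribute, and inverse_transform() turns codes back into the original strings. The sketch below uses a shortened, illustrative sample of the weather column.

```python
from sklearn.preprocessing import LabelEncoder

# Shortened sample of the weather column (illustrative).
weather = ['Sunny', 'Overcast', 'Rainy', 'Sunny']

le = LabelEncoder()
weather_encoded = le.fit_transform(weather)

# classes_ holds the sorted category names; a name's index is its code.
mapping = {str(name): code for code, name in enumerate(le.classes_)}
print(mapping)  # {'Overcast': 0, 'Rainy': 1, 'Sunny': 2}

# inverse_transform recovers the original strings from the codes.
decoded = le.inverse_transform(weather_encoded)
```

The codes follow the sorted order of the category names, which is why Overcast becomes 0 and Sunny becomes 2.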

Similarly, you can encode temperature and label into numeric columns.

# converting string labels into numbers

temp_encoded=le.fit_transform(temp)

label=le.fit_transform(play)

Combining Features

Here, you combine multiple columns (features) into a single dataset using the “zip” function.

# Combining weather and temp into a single list of tuples

features=list(zip(weather_encoded,temp_encoded))

Generating Model

Let’s build KNN classifier model.

First, import the KNeighborsClassifier class and create a KNN classifier object, passing the number of neighbors as the n_neighbors argument to KNeighborsClassifier().

Then, fit the model on the training data using fit() and predict the class of a new point using predict().

from sklearn.neighbors import KNeighborsClassifier

model = KNeighborsClassifier(n_neighbors=3)

# Train the model using the training sets

model.fit(features,label)

#Predict Output

predicted= model.predict([[0,2]]) # 0:Overcast, 2:Mild

print(predicted)

# Output: [1]

In the above example, the input is [0,2], where 0 means Overcast weather and 2 means Mild temperature. The model predicts [1], which means “Yes” (play).
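Because the walkthrough reuses a single encoder for all three columns, the numeric codes have to be read by hand. One possible variation, sketched here, keeps a separate encoder per column so the query can be built from category names and the prediction decoded back to a string. The names weather_le, temp_le and play_le are introduced for this sketch and are not from the original code.

```python
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import LabelEncoder

weather = ['Sunny', 'Sunny', 'Overcast', 'Rainy', 'Rainy', 'Rainy', 'Overcast',
           'Sunny', 'Sunny', 'Rainy', 'Sunny', 'Overcast', 'Overcast', 'Rainy']
temp = ['Hot', 'Hot', 'Hot', 'Mild', 'Cool', 'Cool', 'Cool',
        'Mild', 'Cool', 'Mild', 'Mild', 'Mild', 'Hot', 'Mild']
play = ['No', 'No', 'Yes', 'Yes', 'Yes', 'No', 'Yes',
        'No', 'Yes', 'Yes', 'Yes', 'Yes', 'Yes', 'No']

# One encoder per column, so each mapping stays available afterwards.
weather_le, temp_le, play_le = LabelEncoder(), LabelEncoder(), LabelEncoder()
features = list(zip(weather_le.fit_transform(weather),
                    temp_le.fit_transform(temp)))
label = play_le.fit_transform(play)

model = KNeighborsClassifier(n_neighbors=3)
model.fit(features, label)

# Build the query from category names instead of hand-typed codes.
query = [[weather_le.transform(['Overcast'])[0],
          temp_le.transform(['Mild'])[0]]]
predicted = model.predict(query)

# Translate the numeric prediction back into the original label.
decoded = play_le.inverse_transform(predicted)
print(decoded[0])  # Yes
```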

So far, you have learned how to create a KNN classifier for two classes in Python using scikit-learn. In another post you will learn about KNN with multiple classes.